What's New in Gradient Preview 6.4?

We released Gradient Preview 6.4 on Oct 15, 2019. It brings several new features and bug fixes:

- feature: inheriting from TensorFlow classes enables defining custom Keras layers and models

- feature: improved automatic conversion of .NET types to TensorFlow

- feature: fast marshalling from .NET arrays to NumPy arrays

- bug fix: it is now possible to modify collections belonging to TensorFlow objects

- bug fix: enumerating TensorFlow collections could crash in a multithreaded environment

- new samples: ResNetBlock and C# or Not

- train models in a Jupyter F# notebook in your browser, hosted for free by Microsoft Azure

- preview expiration: extended to March 2020

Contents

- Inheriting from TensorFlow classes

- Marshalling .NET collections to NumPy arrays

- ResNetBlock sample

- Not C# sample

- Azure Notebook sample with F#

- Conclusion

Inheriting from TensorFlow classes

With Gradient Preview 6.4 it is now possible to inherit from most classes in the tensorflow namespace. This enables defining custom Keras models and layers, as suggested in the official TensorFlow tutorial.

NOTE: In Preview 6.4, defining a custom Model class requires every layer to be explicitly tracked with a call to the Model.Track function, so that TensorFlow can keep the .layers collection in sync. Failure to do so may cause training to fail at runtime. The ResNetBlock sample shows how this is done.

Here is an example of a custom composable model: ResNetBlock. It has 3 convolutional layers, each followed by batch normalization, and a “skip-connection” node that connects the input directly to the output.

NOTE: The BatchNormalization layer requires TensorFlow 1.14 to work properly. In 1.10 it is unstable.

    public class ResNetBlock: Model {
        const int PartCount = 3;
        readonly PythonList<Conv2D> convs = new PythonList<Conv2D>();
        readonly PythonList<BatchNormalization> batchNorms = new PythonList<BatchNormalization>();
        readonly PythonFunctionContainer activation;
        readonly int outputChannels;

        public ResNetBlock(int kernelSize, int[] filters,
            PythonFunctionContainer activation = null)
        {
            this.activation = activation ?? tf.keras.activations.relu_fn;

            for (int part = 0; part < PartCount; part++) {
                // Every layer goes through Track so that TensorFlow sees it in .layers.
                // Only the middle convolution uses kernelSize; the other two are 1x1.
                this.convs.Add(this.Track(part == 1
                    ? Conv2D.NewDyn(
                        filters: filters[part],
                        kernel_size: kernelSize,
                        padding: "same")
                    : Conv2D.NewDyn(filters[part], kernel_size: (1, 1))));
                this.batchNorms.Add(this.Track(new BatchNormalization()));
            }

            this.outputChannels = filters[PartCount - 1];
        }

        public override dynamic call(
            object inputs,
            ImplicitContainer<IGraphNodeBase> training = null,
            IEnumerable<IGraphNodeBase> mask = null)
        {
            return this.CallImpl((Tensor)inputs, training?.Value);
        }

        object CallImpl(IGraphNodeBase inputs, dynamic training) {
            IGraphNodeBase result = inputs;

            // Pass "training" on to batch normalization only when it was provided.
            var batchNormExtraArgs = new PythonDict<string, object>();
            if (training != null)
                batchNormExtraArgs["training"] = training;

            for (int part = 0; part < PartCount; part++) {
                result = this.convs[part].apply(result);
                result = this.batchNorms[part].apply(result, kwargs: batchNormExtraArgs);
                // The activation for the last part is applied after the skip connection.
                if (part + 1 != PartCount)
                    result = ((dynamic)this.activation)(result);
            }

            // Skip connection: add the block input to the output.
            result = (Tensor)result + inputs;

            return ((dynamic)this.activation)(result);
        }

        public override dynamic compute_output_shape(TensorShape input_shape) {
            if (input_shape.ndims == 4) {
                var outputShape = input_shape.as_list();
                outputShape[3] = this.outputChannels;
                return new TensorShape(outputShape);
            }

            return input_shape;
        }

        ...
    }

Functions like Model.call, overridden in the ResNetBlock sample above, have many overloads. You only have to override the ones that TensorFlow will actually call. If you fail to override the right one, you will get either an AttributeError or a TypeError with the message “No method matches given arguments” at runtime, telling you which one is missing. This happens before training begins, so if you do heavy preprocessing, remember to test your model on a small data sample first. You might also need to define new overloads.
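The shapes flowing through ResNetBlock can be traced by hand. The sketch below is plain C# with no Gradient calls; ResNetBlockShapes and both of its methods are hypothetical names introduced for illustration. It assumes channels-last input ([batch, height, width, channels]), as compute_output_shape does, and relies on the convolutions keeping Keras' default stride of 1, so that the 1x1 and "same"-padded layers leave height and width unchanged.

```csharp
using System;

// Hypothetical helper, not part of Gradient: traces shapes through ResNetBlock.
static class ResNetBlockShapes {
    // Mirrors compute_output_shape: for rank-4 input only the channel
    // dimension changes, and it ends up equal to the last entry of filters.
    public static int[] OutputShape(int[] inputShape, int[] filters) {
        if (inputShape.Length != 4)
            return inputShape;

        var shape = (int[])inputShape.Clone();
        foreach (int filterCount in filters) {
            // Each convolution replaces the channel count with its filter count;
            // height and width stay the same under the assumptions above.
            shape[3] = filterCount;
        }
        return shape;
    }

    // True when the skip connection adds two tensors with the same channel count.
    public static bool SkipConnectionMatches(int[] inputShape, int[] filters)
        => inputShape.Length == 4
           && inputShape[3] == filters[filters.Length - 1];
}
```

For an input of shape [32, 28, 28, 64] and filters { 16, 16, 64 }, OutputShape returns [32, 28, 28, 64] and SkipConnectionMatches returns true, so the final addition in CallImpl combines tensors of identical shape. With filters { 16, 16, 32 } the channel counts differ, which is worth checking before stacking several blocks.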

Marshalling .NET collections to NumPy arrays

Prior to Preview 6.4, creating a NumPy array from a .NET array copied elements one by one and performed a custom conversion op for each of them, which consumed a lot of both time and memory.

In 6.4 we introduced an extension method .NumPyCopy() for arrays, IEnumerable<T>, and ReadOnlySpan<T> that copies data to TensorFlow-accessible memory very quickly. Unfortunately, we still do not support arrays over 2GiB, because the .NET runtime does not support them. Please vote for large Spans and arrays support in .NET.
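To get a feel for what the 2GiB limit means in practice, here is a small arithmetic sketch in plain C#. ArrayBudget and its methods are hypothetical names, and the result should be read as an upper bound derived from the 2GiB figure above; the actual limit of a particular runtime may be lower.

```csharp
using System;

// Hypothetical helper, not part of Gradient or .NET.
static class ArrayBudget {
    const long TwoGiB = 2L * 1024 * 1024 * 1024;

    // Largest number of elements of the given size that fit into 2GiB.
    public static long MaxElements(int bytesPerElement)
        => TwoGiB / bytesPerElement;

    // Largest number of single-channel images of the given size that fit into 2GiB.
    public static long MaxImages(int width, int height, int bytesPerElement)
        => MaxElements(bytesPerElement) / ((long)width * height);
}
```

With 4-byte floats, MaxElements(4) is 536,870,912, and MaxImages(28, 28, 4) is 684,784 images of FashionMNIST size (28x28). Larger datasets have to be converted in several pieces.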

Example (from Not C# sample):

    static ndarray<float> GreyscaleImageBytesToNumPy(byte[] inputs, int imageCount, int width, int height)
        => (dynamic)inputs.Select(b => (float)b).ToArray().NumPyCopy()
            .reshape(new[] { imageCount, height, width, 1 }) / 255.0f;
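The reshape call above only reinterprets a flat buffer, so it may help to see which byte ends up where. The following sketch is plain C# without Gradient; GreyscaleLayout and its methods are hypothetical names. It assumes the input bytes are stored image after image, row by row, which is what NumPy's default row-major reshape to [imageCount, height, width, 1] expects.

```csharp
using System;

// Hypothetical helper, not part of Gradient.
static class GreyscaleLayout {
    // Scales raw bytes to floats in [0, 1], like the division by 255.0f above.
    public static float[] Normalize(byte[] pixels) {
        var result = new float[pixels.Length];
        for (int i = 0; i < pixels.Length; i++)
            result[i] = pixels[i] / 255.0f;
        return result;
    }

    // Offset of pixel (image, y, x) in a flat row-major buffer
    // shaped [imageCount, height, width, 1].
    public static int FlatIndex(int image, int y, int x, int width, int height)
        => (image * height + y) * width + x;
}
```

For 28x28 images, FlatIndex(1, 0, 0, 28, 28) is 784: the second image starts right after the 784 pixels of the first one.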

ResNetBlock sample

This simple sample demonstrates the inheritance feature of Preview 6.4. It uses a simplified ResNet to solve FashionMNIST with fewer parameters.

Source code can be found in our samples repository on GitHub.

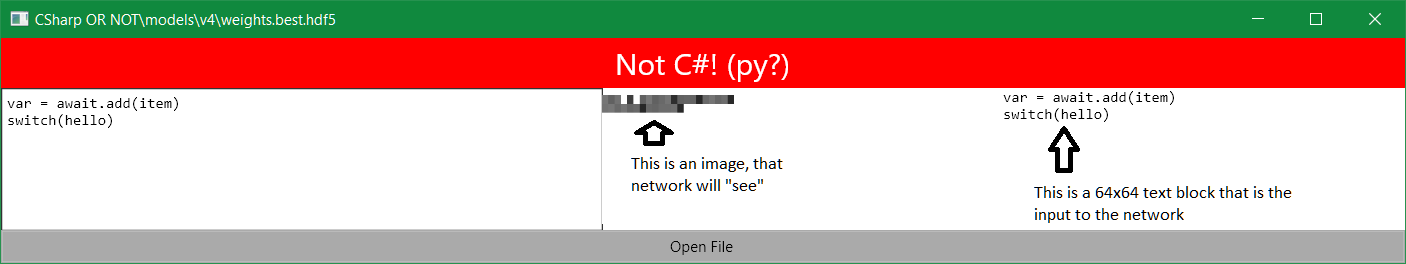

Not C# sample

This is an advanced sample that demonstrates data preparation in C#, training a deep convolutional network, and consuming it from a cross-platform C# application.

The network detects programming language from a code fragment.

A full description of the sample is in a separate blog post.

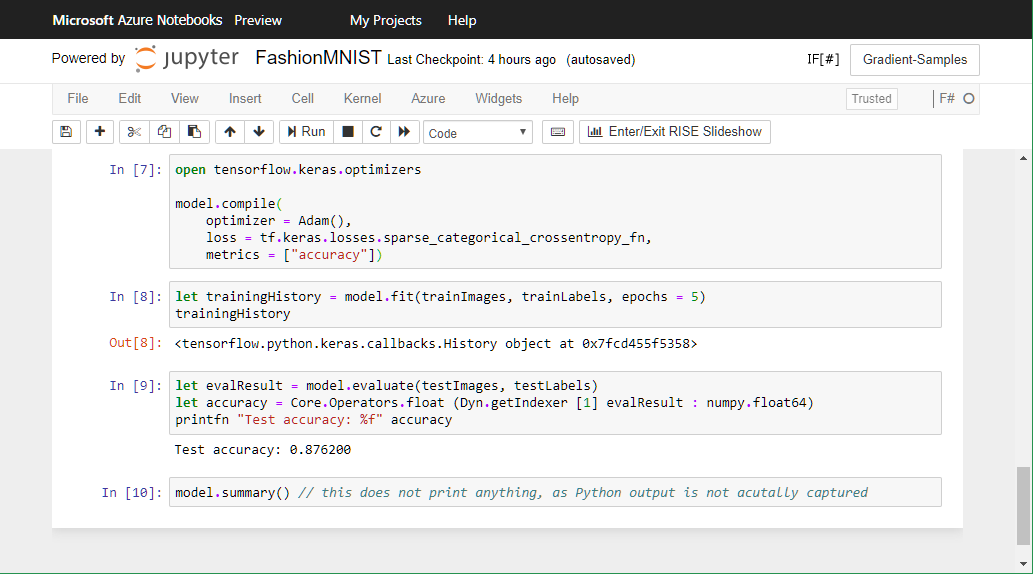

Azure Notebook sample with F#

This is a port of the FashionMNIST sample to Azure Notebooks, which allows training and running models for free in a Jupyter environment provided by Microsoft Azure.

View the notebook in the browser. (Clone it if you wish to edit it; cloning also enables syntax highlighting and autocomplete.)

Conclusion

Try Gradient Preview 6.4 from NuGet or directly in your browser with F#.