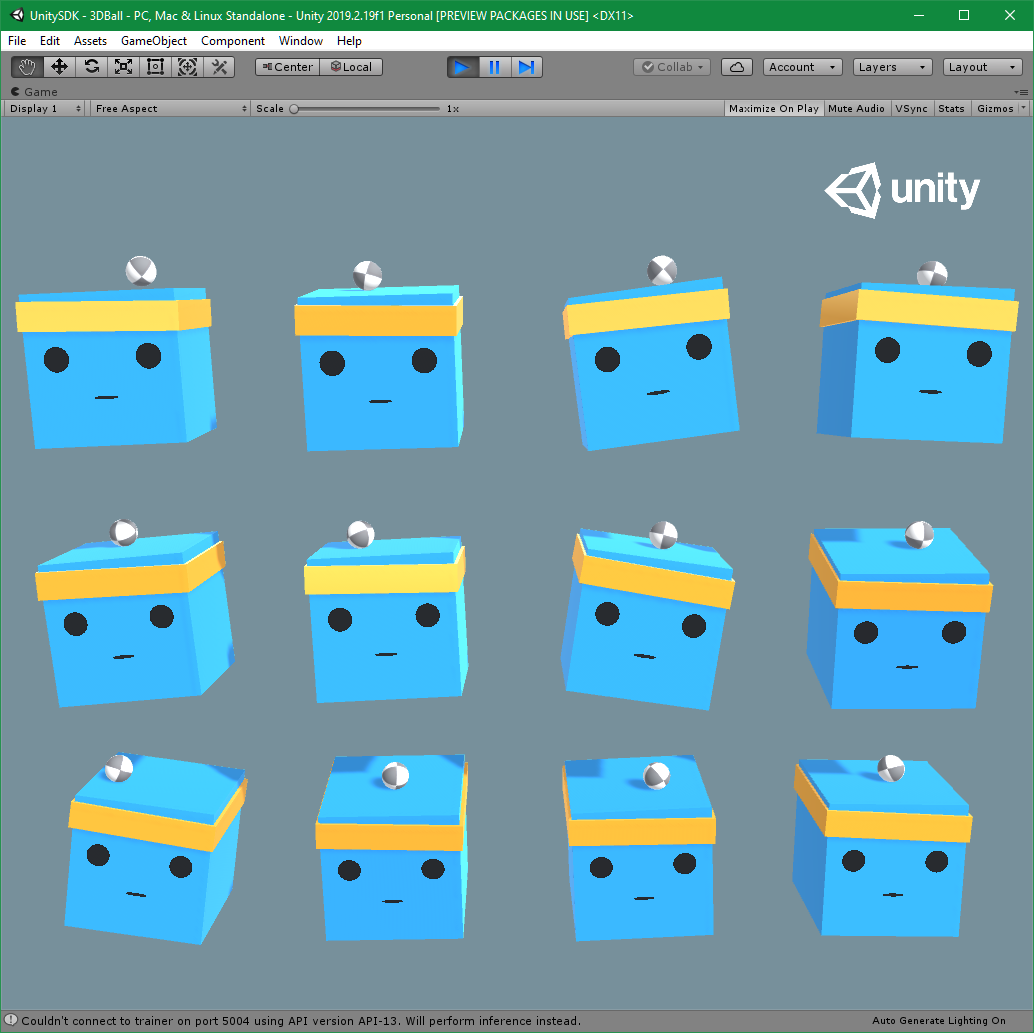

TensorFlow 2.4 Preview 1

We just released LostTech.TensorFlow 2.4 Preview 1 on NuGet. This is the first preview of our TensorFlow binding for .NET that targets TensorFlow 2.x.

Take a look at the official TensorFlow documentation to see what’s new in TensorFlow 2.x.

We also updated the Samples to work with the Preview.

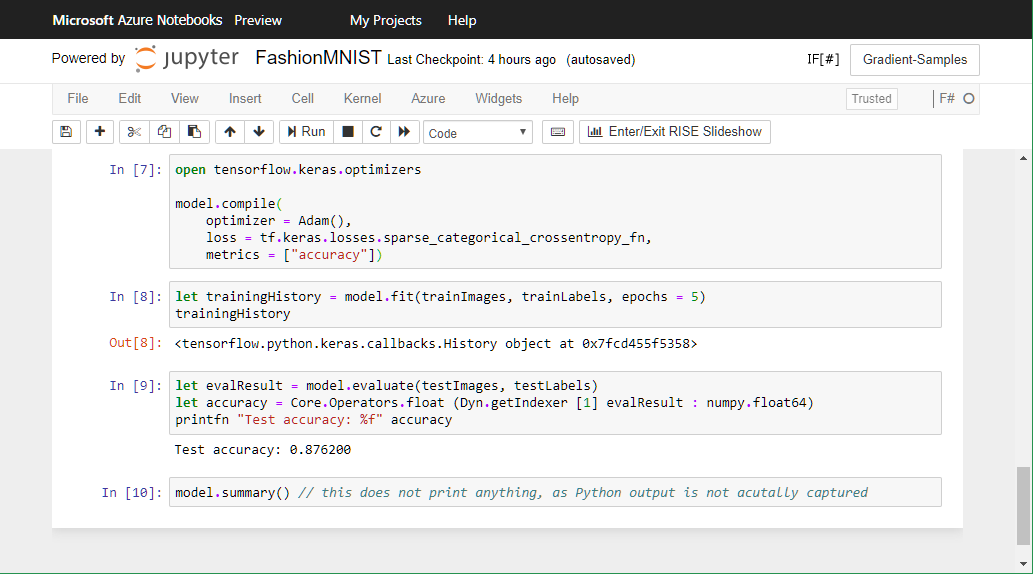

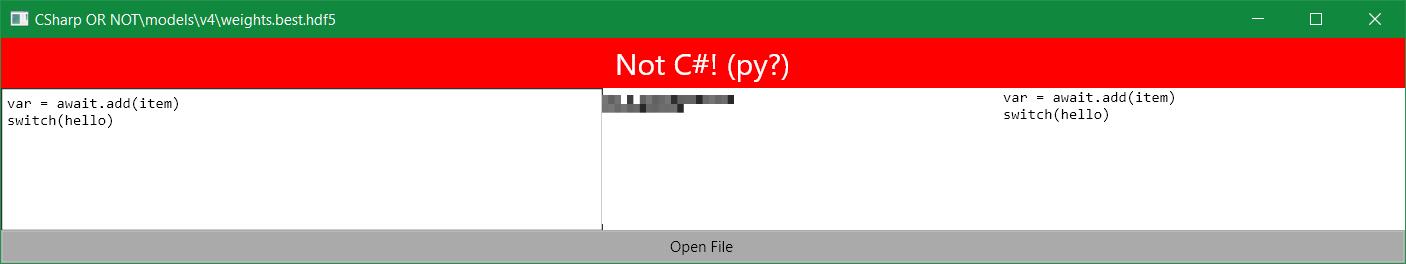

TensorFlow 2 by default works in eager execution mode.

Most samples that use the TF 1.x style require the legacy Session-based mode instead.

Session-based mode can be enabled at the start of the program by calling v1.disable_eager_execution().

Legacy TF 1.x APIs are available in the v1 class under the tensorflow.compat.v1 namespace.
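
As a rough illustration, switching a program to the legacy mode might look like the sketch below. It assumes the LostTech.TensorFlow package is referenced and a compatible TensorFlow runtime is installed. The Program class is a hypothetical entry point used only for this example.

```csharp
using tensorflow.compat.v1;  // namespace that contains the v1 class

static class Program         // hypothetical entry point, for illustration only
{
    static void Main()
    {
        // Switch from the default eager mode to the legacy Session-based mode.
        // Call this at the start of the program, before other TensorFlow code runs.
        v1.disable_eager_execution();

        // TF 1.x style code (graph construction, sessions) would follow here.
    }
}
```

Code written for TensorFlow 2 eager execution does not need this call.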